How to build a simple SMS spam filter with Python

A beginner-friendly tutorial using nltk, string and pandas

Beginner-friendly tutorial using nltk, string and pandas.

What if I told you there’s no need to build a fancy neural network to classify SMS as spam or not?

Currently, the internet offers a variety of complex solutions with Random Forest, Pytorch and Tensorflow — but are these really necessary if a few “for” loops and “if” statements can achieve a very satisfying result?

In this tutorial, I will show you an easy way to predict whether a user-provided string is a spam message or not.

Step 1: We’ll load a dataset.

Step 2: We’ll pre-process the content of each SMS with nltk & string.

Step 3: We’ll determine which words are associated with spam or ham messages and count their occurrences.

Step 4: We’ll build a predict function returning a ham or spam label.

Step 5: We’ll collect user-provided input, pass it through the predict function and print the output.

Video Tutorial of How to Build a Simple SMS Spam Filter

Step 1: Loading the Dataset

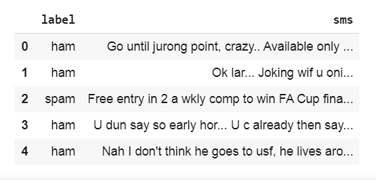

First, we need a neath dataset that would hold a great number of spam and ham messages with their corresponding label.

I‘ll be using the SMS Spam Collection v. 1 dataset by Tiago A. Almeida and José María Gómez Hidalgo, which can be downloaded from here.

- If you’re using Jupyter Notebook, save the text file in the same directory as the notebook file.

We’ll load the data file using the pandas .read_csv() method and display the first 5 values to see how our dataset looks like.import pandas as pddata = pd.read_csv('SMSSpamCollection.txt', sep = '\t', header=None, names=["label", "sms"])data.head() - if you’re using Google Colab, save the text file to your Google Drive and connect it to your notebook before you proceed with the above steps.

Ensure you replace the file_url string with the location on your own drive.from google.colab import drive

import pandas as pddrive.mount(‘/content/drive’)file_url = '/content/drive/My Drive/Colab Notebooks/SMSSpamCollection.txt'data = pd.read_csv(file_url, sep = '\t', header=None, names=["label", "sms"])data.head()

In both cases, we can take a peek at our dataset and start thinking about which transformations we’ll need to perform on its’ content.

Step 2: Pre-Processing

We’ve loaded our dataset, but now we need to tailor it to our needs.

We’ll perform the following transformations on each of the messages:

- Capital Letters: we‘ll convert all capital letters to lowercase letters.

- Punctuation: we’ll remove all the punctuation characters.

- Stop Words: we’ll remove all the frequently used words such as “I, or, she, have, did, you, to”.

- Tokenizing: we’ll tokenize the SMS content, resulting in a list of words for each message.

These can be easily achieved by using the nltk and string modules.

We’ll load our stopwords and punctuation and take a look at their content.

Please note, the results of the print statement are displayed after “>>>” in the code blocks below.import string

import nltk

nltk.download('stopwords')

nltk.download('punkt')stopwords = nltk.corpus.stopwords.words('english')

punctuation = string.punctuationprint(stopwords[:5])

print(punctuation)>>> ['i', 'me', 'my', 'myself', 'we']

>>> !"#$%&'()*+,-./:;<=>?@[\]^_`{|}~

Now we can start defining our pre-processing function, resulting in a list of tokens without punctuation, stopwords or capital letters.

We’ll use lambda to apply the function and store it as an additional column named “processed” in our data frame.def pre_process(sms):

remove_punct = "".join([word.lower() for word in sms if word not

in punctuation])

tokenize = nltk.tokenize.word_tokenize(remove_punct)

remove_stopwords = [word for word in tokenize if word not in

stopwords]

return remove_stopwordsdata['processed'] = data['sms'].apply(lambda x: pre_process(x))

print(data['processed'].head())>>> 0 [go, jurong, point, crazy, available, bugis, n...

>>> 1 [ok, lar, joking, wif, u, oni]

>>> 2 [free, entry, 2, wkly, comp, win, fa, cup, fin...

>>> 3 [u, dun, say, early, hor, u, c, already, say]

>>> 4 [nah, dont, think, goes, usf, lives, around, t...

Step 3: Categorizing and Counting Tokens

After we’ve split each SMS into word tokens, we can proceed with creating two different lists:

- Word tokens associated with spam messages.

- Word tokens associated with ham messages.def categorize_words():

spam_words = []

ham_words = []for sms in data['processed'][data['label'] == 'spam']:

for word in sms:

spam_words.append(word)

for sms in data['processed'][data['label'] == 'ham']:

for word in sms:

ham_words.append(word)return spam_words, ham_wordsspam_words, ham_words = categorize_words()print(spam_words[:5])

print(ham_words[:5])>>> ['free', 'entry', '2', 'wkly', 'comp']

>>> ['go', 'jurong', 'point', 'crazy', 'available']

Step 4: Predict Function

Now we can proceed with our predict function which will take a string of characters and determine whether it’s spam or not. We’ll evaluate the number of spam/ham-associated words and the number of their occurrences within each of the categorize_words() lists.

As many words would be associated both with spam and ham — it is very important to count their instances.

Please note, we’ll be calling the function in the next cell, as we still didn’t collect a string input from the user and pre-processed it so it can be used for prediction.def predict(sms):

spam_counter = 0

ham_counter = 0for word in sms:

spam_counter += spam_words.count(word)

ham_counter += ham_words.count(word)

print('***RESULTS***')if ham_counter > spam_counter:

accuracy = round((ham_counter / (ham_counter + spam_counter) *

100))

print('messege is not spam, with {}%

certainty'.format(accuracy))elif ham_counter == spam_counter:

print('message could be spam')else:

accuracy = round((spam_counter / (ham_counter + spam_counter)

* 100))

print('message is spam, with {}% certainty'.format(accuracy))

Step 5: Collecting User Input

The last step in our project would be the easiest of them all!

We’ll need to collect a string of words from the user, pre-process it and then finally pass them as input to our predict function!user_input = input(“Please type a spam or ham message to check if

our function predicts accurately\n”)

processed_input = pre_process(user_input)predict(processed_input)

Let’s say our user input is “CRA has important information for you, call 1–800–789–7898 now!”, will our function be able to recognize it’s a spam message?processed_input = pre_process(“CRA has important information for

you, call 1–800–789–7898 now!”)

predict(processed_input)>>> 'message is spam with 60% certainty'

Indeed out function was able to recognize there’s a bigger chance that the SMS is spam rather than ham!

Now, try running the code with your own input or perhaps use a different SMS Collection dataset or a different pre-processing function.

You can potentially stem or lemmatize the tokens or even keep the uppercase letters instead of removing them.

There are endless options for text manipulation and I highly encourage you to experiment as much as you can to achieve better results!

I hope you enjoyed this tutorial and found it helpful, please contact me if you have any questions or any suggestions for improvement.

How about a video tutorial?

Are you more of a video person? no problem! checkout this tutorial on my Youtube channel!

create a simple sms spam filter with Python video tutorial

Create a GUI for your Spam Filter:

Turn your raw code into a complete Python app with Dear PyGUI. This tutorial will show you how to do it from scratch! (beginner friendly)

Please refer to the improved GUI code on Github: https://github.com/MariyaSha/SimpleSMSspamFilter_GUI

create a Python GUI app video tutorial

External Links:

Google Colab Notebook (complete code)

Jupyter Notebook (complete code)

Adjusted Code for a Command Prompt Application

Contact Me:

Youtube: https://www.youtube.com/pythonsimplified

LinkedIn: www.linkedin.com/in/mariyasha888

Github: www.github.com/MariyaSha

Instagram: https://instagram.com/mariyasha888